Introduction

Artificial Intelligence has been a transformative force in various sectors, from healthcare and finance to transportation and entertainment, and recent developments in generative AI suggest that it is not slowing down. Its advent has brought about a paradigm shift in how we approach problem-solving and decision-making, enabling us to tackle complex tasks with unprecedented efficiency and precision.

However, as AI models become increasingly complex, it also becomes increasingly difficult to trace their decision-making process in particular cases. This opacity, often referred to as the ‘black box’ problem, poses a significant challenge. It’s like having a brilliant team member who consistently delivers excellent results but cannot explain how they arrive at their conclusions. This lack of transparency can lead to mistrust and apprehension, particularly when the decisions made by these AI models have significant real-world implications. If artificial intelligence is to be used in drafting new laws or as a support for healthcare providers, it must provide not only the answer but also the path it took to reach a particular conclusion.

However, all is not lost: the ‘black box’ problem has led to the emergence of Explainable AI (XAI), a field dedicated to making AI decision-making transparent and understandable to humans. XAI seeks to open the ‘black box’ and shed light on the inner workings of AI models. This is not just about satisfying intellectual curiosity; it’s about trust, accountability, and control. As we delegate more decisions to AI, we need to ensure that these decisions are not only accurate but also fair, unbiased, and transparent.

The Technical Aspects of Explainable AI

Explainable AI is a broad and multifaceted field, encompassing a range of techniques and approaches aimed at making AI systems more understandable to humans. At its core, XAI seeks to answer questions like: Why did the AI system make a particular decision in a particular case? What factors did it take into consideration? On what basis did it make that decision? How confident is it in its decision? It is important to note that XAI is not about understanding the general mechanics of AI, as those are well understood by data scientists, but rather about how a model connects concepts and weighs particular parameters in a particular case.

When it comes to this aspect of explainability, there are two main approaches: interpretable models and post-hoc explanations.

Interpretable models are designed to be inherently explainable. They are typically simple models whose decision-making process is transparent and easy to understand. Decision trees and linear regression models are typical examples. In a decision tree, the decision-making process is represented as a tree structure, where each node represents a decision based on a particular feature, and each branch represents the outcome of that decision. This makes it easy to trace the path of decision-making and understand why the model made a particular decision.
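To make the idea of tracing a decision path concrete, here is a minimal sketch in plain Python. The loan-approval tree, its feature names, and its thresholds are invented purely for illustration and are not taken from any real model.

```python
# A hypothetical, hand-written decision tree for loan approval.
# Inner nodes test one feature against a threshold; leaves carry a label.
tree = {
    "feature": "income", "threshold": 50000,
    "left": {
        "feature": "existing_debt", "threshold": 10000,
        "left": {"label": "approve"},
        "right": {"label": "reject"},
    },
    "right": {"label": "approve"},
}


def predict_with_path(node, sample):
    """Walk the tree and record every test that led to the final label."""
    path = []
    while "label" not in node:
        feature, threshold = node["feature"], node["threshold"]
        value = sample[feature]
        if value <= threshold:
            path.append(f"{feature} = {value} <= {threshold}")
            node = node["left"]
        else:
            path.append(f"{feature} = {value} > {threshold}")
            node = node["right"]
    return node["label"], path


applicant = {"income": 42000, "existing_debt": 15000}
decision, path = predict_with_path(tree, applicant)
print(decision)  # reject
for step in path:
    print(" -", step)
```

The recorded path is the explanation: the applicant was rejected because income was at most 50000 and existing debt exceeded 10000.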

However, interpretable models often trade off some level of predictive power for interpretability. In other words, while they are easy to understand, they may not always provide the most accurate predictions. This is particularly true for complex tasks that involve high-dimensional data or non-linear relationships, which are often better handled by more complex models.

On the other hand, post-hoc explanations are used for more complicated systems like neural networks, which offer high predictive power but are not inherently interpretable. These models are often likened to ‘black boxes’ because their decision-making process is hidden within layers of computations that are difficult to interpret.

Post-hoc explanation techniques aim to ‘open’ these black boxes and provide insights into their decision-making process by generating explanations after the model has made a prediction or given an answer, hence the term ‘post-hoc’. They show which features were most influential in a particular decision, allowing us to understand why the model gave a particular response.

There are several post-hoc explanation techniques, each with its strengths and weaknesses. For instance, LIME (Local Interpretable Model-Agnostic Explanations) is a technique that explains the predictions of any classifier by approximating it locally with an interpretable model. SHAP (SHapley Additive exPlanations), in turn, is a unified measure of feature importance that builds on Shapley values from cooperative game theory and assigns each feature an importance value for a particular prediction.
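The idea of assigning each feature an importance value for one prediction can be illustrated with exact Shapley values on a toy model. This is only a sketch: the model, the feature names, and the all-zero baseline are assumptions made for the example, and the brute-force enumeration below is not how the SHAP library computes its values in practice, since real tools rely on approximations and background data.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical scoring model with an income/tenure interaction.
    return 2.0 * x["income"] + 1.0 * x["age"] + 3.0 * x["income"] * x["tenure"]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; 'absent' features take their baseline value."""
    features = list(instance)
    n = len(features)

    def value(coalition):
        mixed = {f: instance[f] if f in coalition else baseline[f] for f in features}
        return predict(mixed)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_f = value(set(subset) | {f})
                without_f = value(set(subset))
                total += weight * (with_f - without_f)
        phi[f] = total
    return phi


instance = {"income": 1.0, "age": 1.0, "tenure": 1.0}
baseline = {"income": 0.0, "age": 0.0, "tenure": 0.0}
phi = shapley_values(model, instance, baseline)
for name, contribution in phi.items():
    print(name, round(contribution, 6))
```

For this toy model the attributions are 3.5 for income, 1.0 for age, and 1.5 for tenure. They add up to 6.0, the difference between the prediction for the instance and for the baseline, and the interaction term is split evenly between the two features involved in it.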

These techniques have been instrumental in making complex AI models more transparent and understandable. However, they are not without their challenges. For instance, they often require significant computational resources, and their results can sometimes be sensitive to small changes in the input data. Moreover, while they provide valuable insights into the decision-making process of AI models, they do not necessarily make the models themselves more interpretable.
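The local-surrogate idea behind LIME, and the sensitivity mentioned above, can both be seen in a small sketch. It is written in the spirit of LIME rather than reproducing the library: the black-box function, the Gaussian sampling, the kernel width, and all function names are assumptions chosen for illustration.

```python
import math
import random


def black_box(x1, x2):
    # Hypothetical non-linear model we want to explain locally.
    return x1 * x2


def solve_linear_system(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_linear_explanation(predict, point, n_samples=500, scale=0.5,
                             kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear model around `point`."""
    rng = random.Random(seed)
    d = len(point)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        sample = [p + rng.gauss(0.0, scale) for p in point]
        dist2 = sum((s - p) ** 2 for s, p in zip(sample, point))
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + [s - p for s, p in zip(sample, point)]  # centred features
        y = predict(*sample)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coeffs = solve_linear_system(xtwx, xtwy)
    return coeffs[0], coeffs[1:]  # local prediction, per-feature slopes


intercept, slopes = local_linear_explanation(black_box, [2.0, 3.0])
print(round(intercept, 2), [round(s, 2) for s in slopes])
```

Around the point (2, 3) the slopes should land close to 3 and 2, which are the true local sensitivities of x1 * x2. Changing the seed, the number of samples, or the kernel width shifts the result slightly, which is one source of the instability that post-hoc explanations can show.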

However, as you will see below, research into Explainable AI (XAI) is ongoing, and a variety of advanced modeling methods, services, and tools have been developed to enhance the interpretability and transparency of AI systems.

Voice-based Conversational Recommender Systems

A study by Ma et al. (2023) explores the potential of voice-based conversational recommender systems (VCRSs) to revolutionize the way users interact with recommendation systems. These systems leverage natural language processing (NLP) and machine learning to generate human-like explanations of AI decisions, making AI more accessible and understandable to non-technical users. The researchers developed two VCRS benchmark datasets in the e-commerce and movie domains and proposed potential solutions for building end-to-end VCRSs. The study aligns with the principles of explainable AI and AI for social good, utilizing technology’s potential to create a fair, sustainable, and just world. The corresponding open-source code can be found in the VCRS repository.

Tsetlin Machines for Recommendation Systems

A study by Sharma et al. (2022) compares the viability of Tsetlin Machines (TMs) with other machine learning models prevalent in the field of recommendation systems. TMs are a type of interpretable machine learning model that uses simple, understandable rules to make predictions. The authors demonstrate that TMs can provide comparable performance to deep neural networks while offering superior interpretability and scalability. The corresponding open-source code can be found in the Tsetlin Machine repository.

MLSquare: A Framework for Democratizing AI

A paper by Dhavala et al. (2020) introduces MLSquare, a Python framework designed to democratize AI by making it more accessible, affordable, and portable. The framework provides a single point of interface to a variety of machine learning solutions, facilitating the development and deployment of AI systems. The authors emphasize the importance of explainability, credibility, and fairness in democratizing AI, aligning with the principles of XAI. The corresponding open-source code can be found in the MLSquare repository.

It is worth mentioning that the above technologies represent just a fraction of the ongoing research and development efforts. As the field continues to evolve, we can expect to see even more innovative solutions aimed at enhancing the transparency and interpretability of AI systems, facilitating the use of AI in more and more areas of our professional and private lives.

XAI in Practice: Case Studies and Business Implications

However, the technical and theoretical aspect of explainable AI is only part of the issue. After all, the goal is not to create XAI just for the sake of intellectual curiosity, though that has value in itself, but to create real-life applications and benefits. To illustrate, let’s look at a few case studies!

When it comes to artificial intelligence in the banking sector, JPMorgan Chase is using XAI to explain credit risk models to internal auditors and regulators. Credit risk models are complex AI models that predict the likelihood of a borrower defaulting on a loan. They play a crucial role in the bank’s decision-making process, influencing decisions on whether to approve a loan and at what interest rate. However, these models are typically ‘black boxes’ that provide little insight into their decision-making process. By applying XAI techniques, JPMorgan Chase has been able to open these black boxes and provide clear, understandable explanations of their credit risk models. This has not only increased trust in these models and allowed for their optimization and adaptation to changing market environments but also helped the bank meet regulatory requirements.

In the field of healthcare, companies like PathAI are using XAI to provide interpretable AI-powered pathology analyses. Pathology involves the study of disease, and pathologists play a crucial role in diagnosing and treating a wide range of conditions. However, pathology is a complex field that requires a high level of expertise and experience, as well as the ability to parse and recall an enormous amount of information. AI has the potential to assist pathologists by automating some of their tasks and improving the accuracy of their diagnoses. However, for doctors to trust and use these AI systems, they need to understand how they are making their diagnoses. By applying XAI techniques, PathAI has been able to provide clear, understandable explanations of their AI diagnoses, helping doctors understand and trust their AI systems. The key part here is healthcare professionals’ ability to check and verify the answers provided by AI, which allows for easier and faster diagnostics without compromising accuracy or the ability to assign responsibility for possible mistakes.

These case studies illustrate the power and potential of XAI. By making AI systems more transparent and understandable, XAI is not only building trust in AI but also enabling its more effective and responsible use. The paper “Deep Learning in Business Analytics: A Clash of Expectations and Reality” by Marc Andreas Schmitt points out that one possible reason for the slower-than-expected adoption of deep learning in business analytics is the lack of transparency, i.e. the black-box problem, which makes it harder to build trust with both business users and stakeholders. XAI is an obvious way to address this problem and open the way for faster and more efficient data transformations and greater data maturity in enterprise-scale organizations.

The implications of XAI are far-reaching and have the potential to revolutionize how businesses operate. In sectors like finance and healthcare, where decision transparency is crucial, XAI can help build trust and meet regulatory requirements. By understanding how an AI model is making decisions, businesses can better manage risks and make more informed strategic decisions without blindly trusting AI, which can still make mistakes that human oversight would easily prevent.

Moreover, XAI can also lead to improved model performance. By understanding how a model is making decisions, data scientists can identify and correct biases or errors in the model, leading to more accurate and fair predictions. For instance, a study by Carvalho et al. (2019) demonstrated that using XAI techniques to understand and refine a machine learning model led to a 5% improvement in prediction accuracy.

Beyond the aforementioned benefits, XAI can also foster innovation and drive business growth. By providing insights into how AI models make decisions, XAI can help businesses identify new opportunities and strategies. For instance, by understanding which features are most influential in a customer churn prediction model, a business can identify key areas for improving customer retention and develop targeted strategies accordingly.

Furthermore, XAI can also enhance collaboration between technical and non-technical teams within a business. By making AI understandable to non-technical stakeholders, XAI can facilitate more informed and inclusive discussions around AI strategy and implementation. This can lead to better decision-making and more effective use of AI across the business in general.

Future Trends in Explainable AI

As we look towards the future, several emerging trends in XAI are poised to shape the landscape of AI transparency and interpretability. These trends are driven by ongoing research and development efforts, as well as the evolving needs and expectations of various stakeholders, including businesses, regulators, and end-users.

One significant trend is the development of hybrid models that combine the predictive power of complex models with the interpretability of simpler ones. These hybrid models aim to offer the best of both worlds: high predictive accuracy and interpretability. This approach is particularly promising for applications where both accuracy and transparency are critical, such as healthcare and finance. For instance, a study by Sajja et al. (2020) demonstrated the effectiveness of using XAI in the fashion retail industry to facilitate collaborative decision-making among stakeholders with competing goals.

Another exciting area of development is the use of natural language processing (NLP) to generate human-like explanations of AI decisions. By translating complex AI decisions into clear, understandable language, NLP can make AI even more accessible and understandable to non-technical users. This approach could democratize AI, enabling more people to leverage its benefits and contribute to its development. A study by Duell (2021) highlighted the potential of using XAI methods to support ML predictions and human-expert opinion in the context of high-dimensional electronic health records.

Moreover, as AI continues to evolve, we can expect to see new forms of explainability emerging. For instance, visual explainability, which uses visualizations to explain AI decisions, is an emerging field that could provide even more intuitive and accessible explanations of AI. This approach could be particularly effective for explaining AI decisions in fields like image recognition and computer vision, where visual cues play a crucial role.

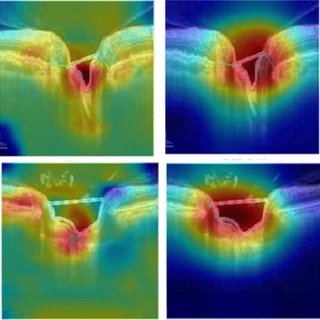

One example is Grad-CAM, or Gradient-weighted Class Activation Mapping, a technique for making Convolutional Neural Networks (CNNs) more interpretable and transparent. It was proposed by Selvaraju et al. and has since been widely adopted in the field of Explainable AI.

Grad-CAM works by generating a heatmap for a given input image, highlighting the important regions that the CNN focuses on for a particular output class. This is achieved by calculating the gradient of the output class score with respect to the final convolutional layer activations. These gradients are averaged over each feature map to give one importance weight per map. The activation maps are then multiplied by their weights, summed, and passed through a ReLU so that only regions with a positive influence on the class remain. The resulting Grad-CAM heatmap can then be upscaled and overlaid on the input image to provide a visual explanation of the CNN’s decision-making process.
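The computation described above can be sketched on a toy example in plain Python. Everything here is an assumption made for illustration: the two 2x2 activation maps, the pooling-plus-linear classification head, and the use of finite differences in place of backpropagation, which a real implementation in a deep learning framework would use instead. The upscaling and overlay step is left out.

```python
def relu(x):
    return max(0.0, x)


def class_score(acts, head_weights):
    """Toy head: global average pooling per channel, then a linear layer."""
    score = 0.0
    for k, fmap in enumerate(acts):
        cells = [v for row in fmap for v in row]
        score += head_weights[k] * sum(cells) / len(cells)
    return score


def numerical_gradients(acts, score_fn, eps=1e-6):
    """d(score)/d(activation) via central differences (stand-in for backprop)."""
    grads = [[[0.0] * len(row) for row in fmap] for fmap in acts]
    for k, fmap in enumerate(acts):
        for i, row in enumerate(fmap):
            for j in range(len(row)):
                original = acts[k][i][j]
                acts[k][i][j] = original + eps
                plus = score_fn(acts)
                acts[k][i][j] = original - eps
                minus = score_fn(acts)
                acts[k][i][j] = original  # restore the activation
                grads[k][i][j] = (plus - minus) / (2 * eps)
    return grads


def grad_cam(acts, score_fn):
    grads = numerical_gradients(acts, score_fn)
    # One weight per channel: the spatial average of its gradients.
    alphas = [sum(v for row in g for v in row) / sum(len(row) for row in g)
              for g in grads]
    height, width = len(acts[0]), len(acts[0][0])
    # Weighted sum of activation maps, then ReLU.
    heatmap = [[relu(sum(alphas[k] * acts[k][i][j] for k in range(len(acts))))
                for j in range(width)] for i in range(height)]
    return alphas, heatmap


# Channel 0 fires top-left and supports the class; channel 1 fires
# bottom-right and counts against it.
acts = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
head_weights = [4.0, -4.0]
alphas, heatmap = grad_cam(acts, lambda a: class_score(a, head_weights))
print([round(a, 3) for a in alphas])
print([[round(v, 3) for v in row] for row in heatmap])
```

In this example only the top-left cell of the heatmap stays positive: the second channel is strongly active in the bottom-right corner, but its negative weight means the ReLU removes it, which is how Grad-CAM keeps the map class-discriminative.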

The GradCAM heatmaps for VGG16, ResNet18 and proposed DL model (left to right) obtained from segmented OCT images of glaucomatous eyes (left).


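The Grad-CAM process is based on several steps: running the image through the network to obtain the class score and the final convolutional activations, computing the gradients of that score with respect to those activations, weighting the activation maps by these gradients, and upscaling the resulting heatmap so it can be overlaid on the input image.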

The Grad-CAM technique offers several key advantages. It operates as a post-hoc method, meaning it can be applied to any pre-trained CNN model without the need for retraining. Additionally, it can explain CNN predictions at different levels of granularity by using convolutional layers at different depths, and it can highlight both class-discriminative and class-agnostic regions, providing a holistic understanding of the CNN’s reasoning process.

In the context of visual explainability, Grad-CAM represents a significant step forward. By highlighting the areas of an image that most influence a network’s decision, it provides valuable insights into how certain layers of the network learn and what features of the image influenced the decision.

However, it is worth mentioning that, as a study by Pi (2023) pointed out, the future of XAI is not just about technical advancements. It’s also about governance and security. As AI becomes increasingly integrated into our lives and societies, ensuring the transparency and accountability of AI systems will become a critical aspect of algorithmic governance. This will require collaborative engagement from all stakeholders, including the public sector, enterprises, and international organizations.

Conclusion

Explainable AI is a rapidly evolving field that holds the promise of making AI more transparent, trustworthy, and effective. As we continue to rely on AI for critical decisions, the importance of understanding these systems will only grow. Through advancements in XAI, we can look forward to a future where AI not only augments human decision-making but also does so in a way that we can understand and trust.

As we move forward, it’s crucial that we continue to prioritize explainability in AI. This is not just about meeting regulatory requirements or building trust; it’s about ensuring that we maintain control over AI and use it in a way that aligns with our values and goals. By making AI explainable, we can ensure that it serves us, rather than the other way around.

Perhaps the best way to prevent Skynet from annihilating the human race is not another Sarah Connor, but understanding and modifying its decision-making process to make it less homicidal.

